Tutorial 02

André Victor Ribeiro Amaral

The solution can be found here.

Introduction

For this problem set, we will use the following libraries:

library(tidyverse)

library(lubridate)

library(zoo)

library(rgeoboundaries)

library(patchwork)

library(viridis)

Throughout the tutorial, consider the following scenario. Let \(\{y_{it}; i = 1, \cdots, I; t = 1, \cdots, T\}\) denote a multivariate time series of disease counts in each region of Saudi Arabia, where \(I = 13\) is the number of regions considered and \(T = 36\) is the length of the time series. In particular, we will model \(Y_{it}|Y_{i,(t-1)} = y_{i,(t-1)} \sim \text{Poisson}(\lambda_{it})\), such that \(\log(\lambda_{it}) = \beta_0 + e_{i} + \beta_1 \cdot t + \beta_2 \cdot \sin(\omega t) + \beta_3 \cdot \cos(\omega t)\), where \(e_i\) corresponds to the population fraction in region \(i\) and \(\omega = 2\pi / 12\). We also have information about the population size and the proportion of men in each region, as well as the number of deaths linked to each element of the \(\{y_{it}\}_{it}\) series.
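To make the model above more concrete, the following sketch simulates one series of counts for a single hypothetical region. The coefficient values (`beta0` to `beta3`) and the region term `e_i` are illustrative assumptions chosen for this example; they are not the values used to generate the tutorial data.

```r
# Illustrative sketch only: all coefficient values below are assumptions.
set.seed(1)

T_len <- 36                # length of the time series (T in the text)
t     <- 1:T_len           # time index, in months
omega <- 2 * pi / 12       # yearly seasonality for monthly data

beta0 <- 3.0               # hypothetical intercept
beta1 <- 0.01              # hypothetical linear trend
beta2 <- 0.3               # hypothetical sine coefficient
beta3 <- 0.2               # hypothetical cosine coefficient
e_i   <- 0.1               # hypothetical region-specific term

# Log-linear predictor and the implied Poisson mean
log_lambda <- beta0 + e_i + beta1 * t +
  beta2 * sin(omega * t) + beta3 * cos(omega * t)
lambda <- exp(log_lambda)

# One simulated series of counts for this region
y <- rpois(n = T_len, lambda = lambda)

head(data.frame(t = t, lambda = round(lambda, 1), y = y))
```

Because the sine and cosine terms have a period of 12 months, the mean at month \(t + 12\) differs from the mean at month \(t\) only through the trend, by a factor of \(\exp(12 \beta_1)\).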

You can download the .csv file here. You can then read the file and convert it to a tibble object as follows:

data <- readr::read_csv(file = 'others/r4ds.csv')

## Rows: 468 Columns: 6

## ── Column specification ────────────────────────────────────────────────────────

## Delimiter: ","

## chr (1): region

## dbl (4): n_cases, pop, men_prop, n_deaths

## date (1): date

##

## ℹ Use `spec()` to retrieve the full column specification for this data.

## ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.

head(data, 5)

## # A tibble: 5 × 6

## date region n_cases pop men_prop n_deaths

## <date> <chr> <dbl> <dbl> <dbl> <dbl>

## 1 2018-01-01 Eastern 33 4105780 0.403 3

## 2 2018-01-01 Najran 23 505652 0.486 1

## 3 2018-01-01 Northern Borders 30 320524 0.666 6

## 4 2018-01-01 Ha’il 36 597144 0.601 3

## 5 2018-01-01 Riyadh 38 6777146 0.559 6

The code used to generate this data set can be found here.

Problem 01

Now, to manipulate our data set, we will use the dplyr package. From its documentation, we can see that there are five main functions (verbs), namely mutate(), select(), filter(), summarise(), and arrange(). We will use all of them.
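As a warm-up before the exercises, here is a minimal sketch of the verbs listed above, applied to a small made-up tibble. The object `toy` and its columns are hypothetical and unrelated to the tutorial's data set.

```r
library(dplyr)

# Hypothetical toy data, unrelated to the tutorial's data set
toy <- tibble(
  city  = c("A", "A", "B", "B", "C"),
  year  = c(2020, 2021, 2020, 2021, 2021),
  sales = c(10, 15, 7, 12, 20)
)

# select(): keep a subset of columns
toy %>% select(city, sales)

# filter(): keep rows that satisfy a condition
big_sales <- toy %>% filter(sales > 10)

# arrange(): reorder rows (here, by decreasing sales)
toy %>% arrange(desc(sales))

# mutate(): add a column computed from existing ones
toy %>% mutate(cum_sales = cumsum(sales))

# summarise(): collapse all rows into summary statistics
toy %>% summarise(mean_sales = mean(sales), n = n())

# group_by() + summarise(): the same statistics, one row per group
by_city <- toy %>%
  group_by(city) %>%
  summarise(mean_sales = mean(sales), n = n())
by_city
```

Each verb takes a tibble as its first argument and returns a new tibble, which is what makes chaining with %>% possible.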

- Start by selecting the date, region, and n_cases columns from our original data set. To do this, use the pipe operator %>% (in RStudio, you may use the shortcut Ctrl + Shift + M).

- Using ?tidyselect::select_helpers, one may find useful functions that can be combined with select(). For instance, select all columns that start with “n_”.

- Aiming to obtain more meaningful sliced data sets, use the filter() and select() functions to select the dates and regions for which the number of cases is greater than 40.

- Also, select the date, name of the region, number of cases, and number of deaths for which the region is Eastern or Najran AND the date is later than 2019-01-01 (you may want to use the lubridate package to deal with dates).

- Now, select the date, name of the region, number of cases, and number of deaths for which the number of cases is larger than 700 OR the number of deaths is equal to 10, and arrange the results in descending order of n_cases.

- Next, using the mutate() function, create a new column in the original data set that shows the cumulative sum of the number of deaths (name it cum_deaths), and select the number of deaths and this newly created column.

- Using the lag() and drop_na() functions, create a new column named n_cases_lag2 and another one named n_deaths_lag3 that are copies of n_cases and n_deaths but with lags 2 and 3, respectively. Then, drop the rows with NAs in the n_cases_lag2 column and select all but the date and region columns.

- Now, the goal is to work with the group_by() function. To do this, group the data set by region, and select the first three columns.

- Combining a few different functions, select all but the pop column and create a new one (named norm_cases) that shows the normalized number of cases by date.

- Using the summarise() (or summarize()) function, get the average and variance for the variables n_cases and n_deaths, as well as the total number of rows.

- You will notice that the results from the above item do not seem very impressive. However, we can combine summarize() with group_by() to get more meaningful statistics. For instance, obtain similar results as before, but for each region.

- As in SQL, in tidyverse, there are also functions that make joining two tibbles possible. From the documentation page, we have inner_join(), left_join(), right_join(), and full_join(). As an example, consider the following data set, which shows the (fake) mean temperature (in Celsius) in almost all regions and studied months in Saudi Arabia. Assuming the previous data set is in data,

set.seed(0)

n <- (nrow(data) - 3)

new_data <- tibble(id = 1:n, date = data$date[1:n], region = data$region[1:n], temperature = round(rnorm(n, 30, 2), 1))

new_data

## # A tibble: 465 × 4

## id date region temperature

## <int> <date> <chr> <dbl>

## 1 1 2018-01-01 Eastern 32.5

## 2 2 2018-01-01 Najran 29.3

## 3 3 2018-01-01 Northern Borders 32.7

## 4 4 2018-01-01 Ha’il 32.5

## 5 5 2018-01-01 Riyadh 30.8

## 6 6 2018-01-01 Asir 26.9

## 7 7 2018-01-01 Mecca 28.1

## 8 8 2018-01-01 Tabuk 29.4

## 9 9 2018-01-01 Medina 30

## 10 10 2018-01-01 Qasim 34.8

## # … with 455 more rows

Notice that there is a column named id in new_data. We will need it to link the new tibble to the old one. Thus, let’s create a similar column in data.

data <- data %>% add_column(id = 1:(nrow(data))) %>% select(c(7, 1:6))

head(data, 1)

## # A tibble: 1 × 7

## id date region n_cases pop men_prop n_deaths

## <int> <date> <chr> <dbl> <dbl> <dbl> <dbl>

## 1 1 2018-01-01 Eastern 33 4105780 0.403 3

Finally, incorporate the new data into the original tibble by using, for example, the left_join() function.
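To see how the join functions differ when one table has fewer rows than the other (as new_data does here), consider the following sketch. The tibbles `cases` and `temps` are hypothetical toy tables that share an `id` key; they only mimic the structure of data and new_data.

```r
library(dplyr)

# Hypothetical toy tables sharing an "id" key
cases <- tibble(id = 1:4, n_cases = c(10, 20, 30, 40))
temps <- tibble(id = 1:3, temperature = c(29.5, 31.0, 30.2))

# left_join() keeps every row of the left table;
# ids without a match in the right table get NA
joined_left <- left_join(cases, temps, by = "id")
joined_left

# inner_join() keeps only the ids present in both tables
joined_inner <- inner_join(cases, temps, by = "id")
joined_inner
```

If both tables also share non-key columns, dplyr appends the suffixes .x and .y to the duplicated names. Joining on all shared columns, or dropping the duplicates beforehand, avoids this.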

Problem 02

For this problem, we will use ggplot2 to produce different plots.

- But first, from the original data set, create an object that contains the columns date, region, n_men, n_women, n_cases, and n_deaths, such that n_men and n_women correspond to the number of men and the number of women, respectively (to do this, recall that men_prop represents the proportion of men in the population for a given region).

- Now, the main goal is to visualize the studied data set. To do this, we will mainly rely on the ggplot2 library, which is also included in the tidyverse universe. Start by creating a line chart for the number of cases in each region (all in the same plot) over the months (when I tried to plot it in my RMarkdown document, I had problems with the Ha’il name. If the same happens to you, try replacing it with Hail). Map region to color.

- When plotting, we can also map more than one property at a time. For instance, plot a dot chart for the number of cases in each region over months that maps region to color and n_deaths to size.

- Now, using the function rollmean() from the zoo package, plot the number of cases in Mecca (notice that you will have to filter your data set) over time and the moving average (with window \(\text{k} = 3\)) of the same time series.

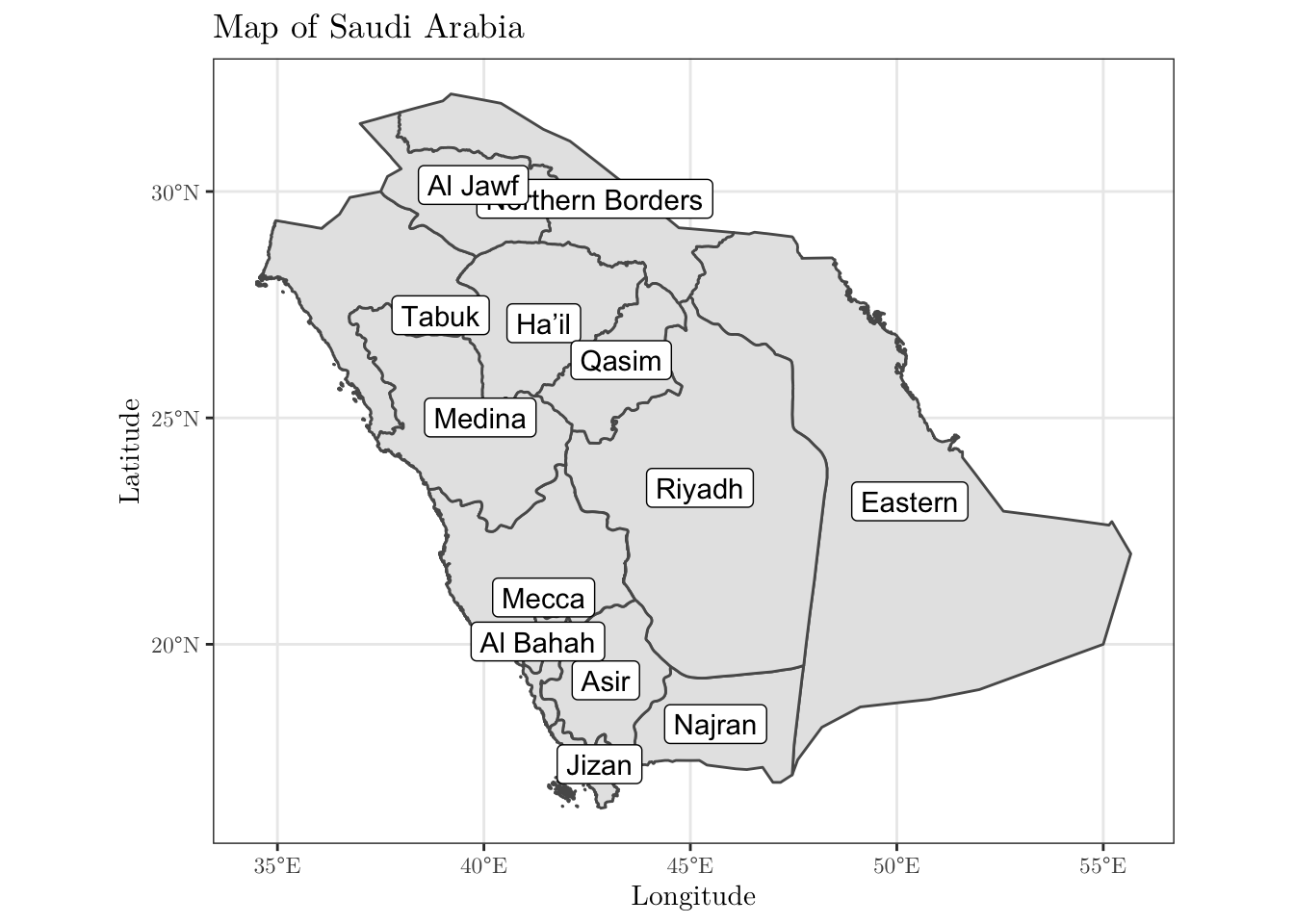
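For the moving-average item above, it may help to first see what rollmean() returns on a short vector. The following is a minimal sketch on hypothetical counts (the vector `x` is made up, not taken from the tutorial data).

```r
library(zoo)

# Hypothetical monthly counts, not taken from the tutorial data
x <- c(12, 15, 11, 18, 20, 17, 22)

# Centered moving average with window k = 3.
# fill = NA pads both ends, so the result has the same length as x
# and can be stored next to the original series in a tibble.
ma3 <- rollmean(x, k = 3, fill = NA, align = "center")
ma3
```

Without fill = NA, the result would be shorter than the input (here, 5 values instead of 7), which makes it awkward to add as a column with mutate(). With the padding, one possible approach is to compute the moving average inside mutate() and draw it with a second geom_line() layer.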

Now, we will plot the map of Saudi Arabia divided into the given regions. To do this, we will need to work with a shapefile for the boundaries of the Kingdom. This type of file may be found online. However, there are some R packages that provide such data. For instance, we can use the geoboundaries('Saudi Arabia', adm_lvl = 'adm1') function from the rgeoboundaries package to obtain the desired data set.

After downloading the data, you can plot it using the ggplot(data) + geom_sf() functions (depending on what you are trying to do, you may have to install (and load) the sp and/or sf libraries).

KSA_shape <- geoboundaries('Saudi Arabia', adm_lvl = 'adm1')

KSA_shape$shapeName <- data$region[1:13]

ggplot(data = KSA_shape) +

  geom_sf() +

  geom_sf_label(aes(label = shapeName)) +

  theme_bw() +

  labs(x = 'Longitude', y = 'Latitude', title = 'Map of Saudi Arabia') +

  theme(text = element_text(family = 'LM Roman 10'))

- As a final task, plot two versions of the above map side by side. On the left, the colors should represent the population size for men in each region; similarly, on the right, the population size for women (to combine plots in this way, you may want to use the patchwork package, and for different color palettes, you may use the viridis package). Use the same scale, so that the values are comparable.
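One way to get comparable colors across two panels is to pass the same limits to both fill scales. The sketch below illustrates the idea on a hypothetical grid of tiles rather than on the map itself; the data frame `toy` and its columns are made up for this example.

```r
library(ggplot2)
library(patchwork)
library(viridis)

# Hypothetical toy data: one value per cell for two groups
toy <- data.frame(
  x     = rep(1:3, times = 2),
  y     = rep(1:2, each = 3),
  men   = c(5, 12, 8, 20, 3, 15),
  women = c(7, 9, 25, 4, 11, 6)
)

# Common limits, so that both panels share the same color scale
lims <- range(c(toy$men, toy$women))

p_men <- ggplot(toy, aes(x = x, y = y, fill = men)) +
  geom_tile() +
  scale_fill_viridis(limits = lims, name = "Population") +
  labs(title = "Men")

p_women <- ggplot(toy, aes(x = x, y = y, fill = women)) +
  geom_tile() +
  scale_fill_viridis(limits = lims, name = "Population") +
  labs(title = "Women")

# Place the plots side by side and merge the identical legends into one
combined <- p_men + p_women + plot_layout(guides = "collect")
combined
```

For the map, the same pattern should carry over by replacing geom_tile() with geom_sf() and mapping fill to the columns holding the number of men and women in each region.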